Building a Lightweight Agentic Workflow

Use Case For The Real World: Estimating Carbon Footprints Using AI (!)

TL;DR for Non-Technical Readers

We built a smart, interactive system to quickly estimate the carbon footprint of chemicals. Instead of filling out complicated forms, you can just type questions naturally (like asking about the CO₂ emissions of shipping chemicals). The system uses advanced AI to understand your request, gathers the needed data (even chatting with you if necessary), and calculates the results transparently. This approach blends human-friendly interactions with reliable numbers, ensuring clear and trustworthy estimates.

Try it yourself! While this system is “under construction”, you can test the live prototype at https://agents.lyfx.ai. Expect some rough edges as we continue building and refining the workflow.

I learned long ago that the gap between “we need to calculate something” and “we have a system that actually works” is often filled with more complexity than anyone initially expects. When we set out to build a quick first-order estimator for cradle-to-gate greenhouse gas emissions of any chemical, I thought: “How hard can it be? It is just a simple spreadsheet, right?”

Well, it turns out that when you want users to input free-form requests like “what is the CO₂ footprint of 50 tonnes of acetone shipped 200 km?” instead of filling out rigid forms, you need something smarter than a spreadsheet. Enter: agentic workflows.

I have experimented with various approaches to building agent workflows (from custom orchestration layers to other frameworks), but LangGraph has emerged as the most robust solution for this type of hybrid human-AI interaction. It handles state management, interrupts, and complex routing patterns with the kind of reliability you need when building something people will actually use.

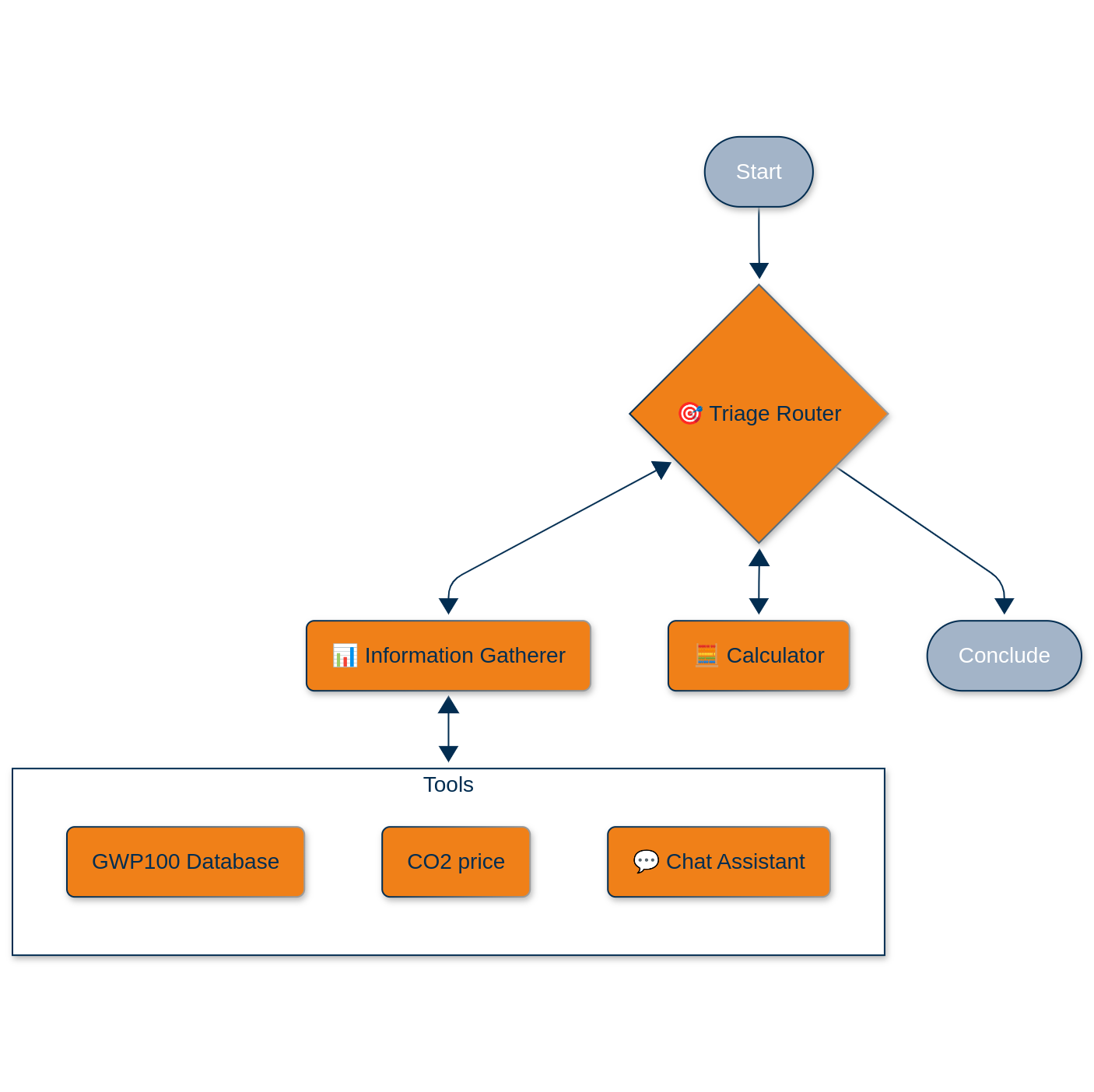

The Architecture: Orchestrated Chaos

Our system assumes a simplified life cycle: production at a single site, transport to point of use, and partial or total atmospheric release. But the magic happens in how we handle the messy human-AI interaction needed to gather the required parameters.

Here’s what we built using LangGraph as our orchestration engine, wrapped in a Django application served via uvicorn and nginx:

The Triage Router Agent

This is the conductor of our little orchestra. It uses OpenAI’s GPT-4o with structured outputs (Pydantic models, because type safety matters even in the age of LLMs) to classify incoming requests:

    from typing import Literal

    from pydantic import BaseModel, Field

    class TriageRouter(BaseModel):
        reasoning: str = Field(description="Step-by-step reasoning behind the classification.")
        classification: Literal["gather information", "calculate", "respond and conclude"]
        response: str = Field(description="Response to user's request")

The triage agent decides whether we need more information, are ready to calculate, or can conclude with an answer. It is essentially a state machine with an LLM brain.
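To make the “state machine” idea concrete, here is a minimal, dependency-free sketch of the routing loop. It is not our production code: the node names (`information_gatherer`, `calculator`, `end`), the `run_workflow` driver, and the rule-based `scripted_triage` function standing in for the GPT-4o call are all assumptions made for illustration.

```python
from typing import Callable, Literal

Classification = Literal["gather information", "calculate", "respond and conclude"]

# Hypothetical node names; the real graph may use different ones.
NEXT_NODE: dict[str, str] = {
    "gather information": "information_gatherer",
    "calculate": "calculator",
    "respond and conclude": "end",
}


def run_workflow(
    triage: Callable[[dict], Classification],
    state: dict,
    max_steps: int = 10,
) -> list[str]:
    """Ask triage for a label, follow it, and repeat until it concludes."""
    visited: list[str] = []
    for _ in range(max_steps):
        node = NEXT_NODE[triage(state)]
        visited.append(node)
        if node == "end":
            break
        # Stand-in for running the worker node: just record that it ran.
        state[node + "_done"] = True
    return visited


def scripted_triage(state: dict) -> Classification:
    """Rule-based stand-in for the LLM call, for illustration only."""
    if not state.get("information_gatherer_done"):
        return "gather information"
    if not state.get("calculator_done"):
        return "calculate"
    return "respond and conclude"


path = run_workflow(scripted_triage, {})
print(path)  # ['information_gatherer', 'calculator', 'end']
```

The `max_steps` guard is a design choice worth keeping in any real version: an LLM-driven router can loop, and a hard cap turns that into a visible failure instead of an endless conversation.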

The Information Gatherer Agent

This is where things get interesting. The agent is hybrid. It can either call tools programmatically or route to an interactive chat agent when it needs human input. The tools available are:

GWP Database Tool: Instead of maintaining a static lookup table, we built an LLM-powered “database” that searches through our chemical inventory with over 200 entries. When you ask for methane’s GWP-100, it does not just do string matching; it understands that “CH₄” and “methane” refer to the same molecule. The tool returns a classification (found/ambiguous/not available) plus the actual GWP value.

CO₂ Price Checker: Currently a placeholder returning 0.5 €/ton (we are building incrementally!), but designed to be swapped with a real-time API.

Interactive Chat Capability: When the information gatherer cannot get what it needs from tools, it seamlessly hands off to a chat agent. This is not just a simple handoff: we use LangGraph’s NodeInterrupt mechanism to pause the workflow, collect user input, then resume exactly where we left off.
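The three-way result of the GWP tool (found / ambiguous / not available) can be sketched without an LLM. The version below swaps the LLM-powered search for a plain alias table and substring match, so it only illustrates the return contract, not how our tool actually searches. The function name `lookup_gwp` and the three-entry inventory are assumptions; the values are IPCC AR5 GWP-100 figures used as examples, not an excerpt from our database.

```python
from typing import Optional

# Illustrative inventory (IPCC AR5 GWP-100 values), not the real 200+ entry database.
INVENTORY = {
    "carbon dioxide": {"aliases": {"co2", "co₂"}, "gwp_100": 1.0},
    "methane": {"aliases": {"ch4", "ch₄"}, "gwp_100": 28.0},
    "nitrous oxide": {"aliases": {"n2o", "n₂o"}, "gwp_100": 265.0},
}


def lookup_gwp(query: str) -> tuple[str, Optional[float]]:
    """Return (classification, gwp_100) for a chemical name or formula."""
    q = query.strip().lower()
    # Exact match on the canonical name or a known alias.
    for name, entry in INVENTORY.items():
        if q == name or q in entry["aliases"]:
            return "found", entry["gwp_100"]
    # Fall back to substring matches on canonical names.
    partial = [name for name in INVENTORY if q and q in name]
    if len(partial) == 1:
        return "found", INVENTORY[partial[0]]["gwp_100"]
    if len(partial) > 1:
        return "ambiguous", None
    return "not available", None


for query in ["CH₄", "methane", "oxide", "acetone"]:
    print(query, "->", lookup_gwp(query))
```

Here “oxide” matches both carbon dioxide and nitrous oxide, so the caller gets “ambiguous” and can hand off to the chat agent to ask which one is meant.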

The Calculator Agent

This is the only agent that does actual math, and deliberately so. It is a ReAct agent equipped with two calculation tools:

    from langchain_core.tools import tool  # assumed import; not shown in the original snippet

    @tool
    def chemicals_emission_calculator(
        chemical_name: str,
        annual_volume_ton: float,
        production_footprint_per_ton: float,
        transportation: list[dict],
        release_to_atmosphere_ton_p_a: float,
        gwp_100: float,
    ) -> tuple[str, float]:
        """Estimate production, transport, and release emissions for one chemical."""
        ...  # function body not shown in this article

The transportation parameter accepts a list of logistics steps, for example:

    [{"step": "production to warehouse", "distance_km": 50, "mode": "road"},
     {"step": "warehouse to port", "distance_km": 250, "mode": "rail"}]

Each mode has a hardcoded emission factor (road: 0.00014, rail: 0.000015, ship: 0.000136, air: 0.0005 ton CO₂e per ton·km) sourced from EEA data.

The math is intentionally simple: sum up production emissions, transport emissions, and atmospheric release impacts, each calculated deterministically.
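As a rough illustration of that sum, here is a minimal sketch. The emission factors are the ones quoted above; everything else is an assumption: the function name `estimate_emissions`, the reading that the whole annual volume travels every transport step, and the formulas themselves (volume × footprint, volume × distance × factor, released tons × GWP-100). The example inputs are made up.

```python
# Emission factors as quoted in the article, in ton CO2e per ton-km.
EMISSION_FACTORS = {"road": 0.00014, "rail": 0.000015, "ship": 0.000136, "air": 0.0005}


def estimate_emissions(
    annual_volume_ton: float,
    production_footprint_per_ton: float,
    transportation: list[dict],
    release_to_atmosphere_ton_p_a: float,
    gwp_100: float,
) -> dict[str, float]:
    """Return the three emission components and their total, in ton CO2e per year."""
    production = annual_volume_ton * production_footprint_per_ton
    # Assumption: the full annual volume is moved across every logistics step.
    transport = sum(
        annual_volume_ton * step["distance_km"] * EMISSION_FACTORS[step["mode"]]
        for step in transportation
    )
    release = release_to_atmosphere_ton_p_a * gwp_100
    return {
        "production": production,
        "transport": transport,
        "release": release,
        "total": production + transport + release,
    }


# Made-up inputs: 50 t/a of methane, 1.0 t CO2e per ton produced, 1 t released.
steps = [
    {"step": "production to warehouse", "distance_km": 50, "mode": "road"},
    {"step": "warehouse to port", "distance_km": 250, "mode": "rail"},
]
result = estimate_emissions(50, 1.0, steps, 1.0, 28.0)
print(result)
```

With these inputs the transport term is 50 × 50 × 0.00014 + 50 × 250 × 0.000015 ≈ 0.54 t CO₂e, small next to production (50) and release (28). An unknown transport mode raises a `KeyError` rather than silently defaulting, which suits a calculation that has to be auditable.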

The Technical Stack: LangGraph + Django

Our system leverages LangGraph’s StateGraph along with a custom State class to maintain conversation context, collected data, and routing information as agents hand off to each other. During development, we rely on MemorySaver for in-memory persistence, but we’ll transition to SqliteSaver with disk-based checkpoints for production environments running multiple uvicorn workers.

Every agent delivers responses through Pydantic models, which provides type safety and mitigates the typical LLM hallucination problems around routing decisions. When the triage agent decides to “calculate,” it returns exactly that string rather than variations like “Calculate” or “time to calculate.”
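The effect of that constraint can be sketched with the standard library alone. The snippet below is a stand-in for the check Pydantic performs on a `Literal` field, not a replacement for it; the helper name `parse_triage_output` and the sample JSON strings are assumptions for illustration.

```python
import json
from typing import Literal, get_args

Classification = Literal["gather information", "calculate", "respond and conclude"]
ALLOWED = set(get_args(Classification))


def parse_triage_output(raw: str) -> dict:
    """Parse a JSON reply and reject any classification that is not an exact label."""
    data = json.loads(raw)
    if not isinstance(data, dict):
        raise ValueError("expected a JSON object")
    label = data.get("classification")
    if label not in ALLOWED:
        raise ValueError(f"unexpected classification: {label!r}")
    return data


ok = parse_triage_output(
    '{"reasoning": "All inputs present.", "classification": "calculate", "response": "Calculating."}'
)
print(ok["classification"])  # calculate

try:
    parse_triage_output('{"classification": "Calculate"}')
except ValueError as err:
    print("rejected:", err)
```

Because the router only ever sees one of three exact strings, the routing code can be a plain lookup with no fuzzy matching.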

The chat agent incorporates NodeInterrupt functionality to pause workflows when user input is required. The state tracks which agent initiated the chat through the caller_node field, ensuring proper routing after information collection. The entire workflow operates within a Django application, giving us the flexibility to add user authentication, data persistence, and API endpoints as requirements evolve. We serve everything through uvicorn for async capabilities and nginx for production reliability.
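The pause-and-resume flow with `caller_node` might look roughly like the sketch below. It deliberately avoids LangGraph so it runs on its own: the `NeedsUserInput` exception is a stand-in for `NodeInterrupt`, and the state fields other than `caller_node`, the node functions, and the `triage` node name are hypothetical. In the real system the checkpointer persists state across the pause; here a plain dict plays that role.

```python
from typing import Optional, TypedDict


class State(TypedDict):
    collected: dict
    caller_node: Optional[str]
    pending_question: Optional[str]


class NeedsUserInput(Exception):
    """Stand-in for LangGraph's NodeInterrupt: raised to pause the workflow."""


def information_gatherer(state: State) -> str:
    """Return the name of the next node to run."""
    if "production_footprint_per_ton" in state["collected"]:
        return "triage"
    # Remember who asked, so the chat agent knows where to return.
    state["caller_node"] = "information_gatherer"
    state["pending_question"] = "What is the production footprint per ton (t CO2e/t)?"
    return "chat_agent"


def chat_agent(state: State, user_answer: Optional[str] = None) -> str:
    """Pause until the user answers, then route back to the caller."""
    if user_answer is None:
        raise NeedsUserInput(state["pending_question"])
    state["collected"]["production_footprint_per_ton"] = float(user_answer)
    state["pending_question"] = None
    return state["caller_node"] or "triage"


state: State = {"collected": {}, "caller_node": None, "pending_question": None}
first_hop = information_gatherer(state)           # data missing: go to chat
try:
    chat_agent(state)                             # no answer yet: workflow pauses
except NeedsUserInput as pause:
    question = str(pause)
back_to = chat_agent(state, user_answer="2.0")    # resumed with the user's reply
after = information_gatherer(state)               # data now present: move on
print(first_hop, "|", question, "|", back_to, "|", after)
```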

Several key features are still in development. Currently, when the system needs the GHG footprint per ton of a chemical’s production, it simply asks the user. This is a temporary solution while we build out a comprehensive database of production routes and their associated emissions. Think of it as a more sophisticated version of what SimaPro or GaBi offers, but specifically focused on chemicals and accessible through an API.

We’re also planning to integrate real-time data feeds for CO₂ prices, shipping routes, and chemical properties through live APIs. However, we’re prioritizing the orchestration layer first, then we’ll swap in these real data sources once the foundation is solid.
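One way to keep that swap cheap is to hide each data source behind a small interface. The sketch below is an assumption about how this could be structured, not a description of our code: `CO2PriceSource`, `PlaceholderPrice`, `LivePrice`, and `emission_cost` are hypothetical names, the fetch function is injected rather than tied to any real API, and the 80.0 figure is invented for the example.

```python
from typing import Callable, Protocol


class CO2PriceSource(Protocol):
    def price_eur_per_ton(self) -> float: ...


class PlaceholderPrice:
    """Mirrors the current placeholder tool: a fixed 0.5 EUR per ton."""

    def price_eur_per_ton(self) -> float:
        return 0.5


class LivePrice:
    """Wraps any callable that fetches a price, e.g. a future API client."""

    def __init__(self, fetch: Callable[[], float]) -> None:
        self._fetch = fetch

    def price_eur_per_ton(self) -> float:
        return self._fetch()


def emission_cost(total_ton_co2e: float, source: CO2PriceSource) -> float:
    """Cost of the emissions in EUR; unaware of where the price comes from."""
    return total_ton_co2e * source.price_eur_per_ton()


print(emission_cost(100.0, PlaceholderPrice()))         # 50.0
print(emission_cost(100.0, LivePrice(lambda: 80.0)))    # 8000.0
```

The workflow and the calculation stay untouched when the placeholder is replaced; only the object passed in changes.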

Another area for improvement is conversation memory. Each calculation currently starts from scratch, but adding session persistence to remember previous queries and build on them will be straightforward given our current architecture.

This architecture serves as an excellent starting point because it maintains clear separation of concerns. LLMs handle the messy human interaction and routing logic, while Python functions manage the deterministic calculations. This means domain experts can validate and modify the mathematical components without needing to touch the AI stack.

The workflows remain highly debuggable thanks to LangGraph’s state management, which lets you inspect exactly what each agent decided and why. When something goes wrong, you’re not stuck debugging a black box. The system also supports incremental complexity beautifully—you can start with hardcoded values, gradually add database lookups, and then integrate real-time APIs, all while keeping the core workflow structure intact.

Perhaps most importantly, every calculation step becomes auditable through LangSmith logging. When someone inevitably asks “where did that 142.7 tons CO₂e come from?”, you can show them the exact inputs and formula used, creating a complete audit trail from question to final result.

The Bigger Picture

This is not just about carbon footprints. The pattern – use LLMs for natural language understanding and workflow orchestration, but keep the critical calculations in deterministic code – applies to any domain where you need to mix soft reasoning with hard numbers.

Supply chain risk assessment? Same pattern. Financial modeling with regulatory compliance? Same pattern. Any time you find yourself thinking “we need a smart interface to our existing calculations,” this architecture gives you a starting point.

The future is hybrid intelligence, not just throwing everything at an LLM and hoping for the best.

Built in Python with LangGraph, OpenAI APIs, and Django. Co-programmed using Claude Sonnet 3.7 and 4 and ChatGPT o3 (not “vibe coded”). Currently in active development. Try it at https://agents.lyfx.ai.